What AI in Finance Teaches Us Beyond Code

- X —iO

- Jul 14, 2025

Updated: Sep 2, 2025

AI in Finance | What is AI? Implementation | Adoption | Use cases | Challenges

As part of my mentorship at AI-Talents, I had the opportunity to explore how AI is radically transforming the finance world, not in the future but right now. From fraud detection to personalized banking, we’re witnessing a technological shift that demands not only technical precision but also ethical responsibility and strategic foresight. Artificial Intelligence is no longer experimental in the financial world; it’s instrumental. Yet, as AI reshapes the way institutions assess risk, serve customers, and drive revenue, it also exposes a deeper truth: AI must be implemented with strategy, ethics, and purpose, and, not least, soon enough to remain in the game. But one element determines the success or failure of any AI implementation: data quality. Without clean, complete, and well-structured data, even the most advanced AI models become unreliable, leading to flawed decisions and increased risk. This series dives into what banks and fintechs are getting right, and wrong, and how we can learn from them.

Why Data Quality Can Make or Break AI in Finance

Poor data isn’t just a technical issue; it’s a strategic liability. In finance, where precision is critical, flawed data can lead to biased credit scoring, inaccurate fraud detection, and misleading customer insights. Generative AI models trained on noisy or unrepresentative datasets may produce outputs that are irrelevant or even harmful, including confident but false statements, a phenomenon known as “hallucination.” In a regulatory-heavy environment, decisions made on bad data could mean reputational damage or legal consequences. That’s why data governance and validation processes must evolve hand-in-hand with AI adoption.
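To make the idea of a validation process a bit more concrete, here is a minimal sketch in Python of the kind of checks that could run before data reaches a model. Everything in it is an assumption made for illustration: the `Transaction` fields, the `ALLOWED_CURRENCIES` list, and the four checks are invented here and do not come from any bank’s actual pipeline.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Transaction:
    # Hypothetical record layout, invented for this example.
    tx_id: str
    amount: Optional[float]  # None models a missing value
    currency: str


# Hypothetical allow-list, chosen only for this example.
ALLOWED_CURRENCIES = {"EUR", "USD", "GBP"}


def validate(transactions: list[Transaction]) -> dict[str, list[str]]:
    """Return a mapping from issue name to the offending transaction ids."""
    issues: dict[str, list[str]] = {
        "missing_amount": [],
        "non_positive_amount": [],
        "unknown_currency": [],
        "duplicate_id": [],
    }
    seen: set[str] = set()
    for tx in transactions:
        # Completeness and uniqueness checks.
        if tx.tx_id in seen:
            issues["duplicate_id"].append(tx.tx_id)
        seen.add(tx.tx_id)
        if tx.amount is None:
            issues["missing_amount"].append(tx.tx_id)
        elif tx.amount <= 0:
            issues["non_positive_amount"].append(tx.tx_id)
        # Consistency check against the allow-list.
        if tx.currency not in ALLOWED_CURRENCIES:
            issues["unknown_currency"].append(tx.tx_id)
    return issues


sample = [
    Transaction("t1", 120.0, "EUR"),
    Transaction("t2", None, "USD"),
    Transaction("t2", 35.5, "USD"),
    Transaction("t3", -10.0, "XXX"),
]
report = validate(sample)
for issue, ids in report.items():
    print(issue, ids)
```

A real governance process would cover far more (lineage, bias checks, drift over time), but even simple checks like these catch records that would otherwise quietly distort a model.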

What is AI?

In simple terms, AI (Artificial Intelligence) is the ability of computers or machines to perform tasks that usually require human intelligence, such as understanding language, recognizing images, or making decisions.

The European Central Bank developed the following taxonomy:

Rule-based Algorithms – follow clear instructions (like “if this, then that”).

Machine Learning (ML) – learns patterns from data and improves over time, especially through artificial neural networks (ANNs), which are inspired by how our brains work. This branch is the foundation of the Large Language Models (LLMs) that shape today’s AI landscape.
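The difference between the two branches can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the ECB’s definition in code: the 1000.0 threshold, the sample amounts, and the labels are all invented, and the “learning” is the simplest possible kind (a one-feature threshold picked from labelled examples), far from a neural network.

```python
def rule_based_flag(amount: float) -> bool:
    """Rule-based: a human wrote the threshold (hypothetical value)."""
    return amount > 1000.0


def learn_threshold(amounts: list[float], is_fraud: list[bool]) -> float:
    """Tiny 'machine learning': pick the threshold that misclassifies
    the fewest labelled examples (a one-feature decision stump)."""
    candidates = sorted(set(amounts))
    best_threshold, best_errors = candidates[0], len(amounts) + 1
    for threshold in candidates:
        # An example is predicted as fraud when its amount reaches the threshold.
        errors = sum(
            (amount >= threshold) != label
            for amount, label in zip(amounts, is_fraud)
        )
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


# Invented training data: small payments are legitimate, larger ones are fraud.
amounts = [20.0, 45.0, 80.0, 300.0, 350.0, 900.0]
labels = [False, False, False, True, True, True]

learned = learn_threshold(amounts, labels)
print("hand-written rule flags 300.0:", rule_based_flag(300.0))
print("learned threshold:", learned)
```

The hand-written rule misses the 300.0 payment because nobody told it to look there, while the learned threshold moves to wherever the data says fraud starts. The flip side is the theme of this article: if the labels are wrong, the learned rule is wrong too.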

Today’s most powerful AI systems are called foundation models — they’re trained on massive amounts of data (like text, images, and sounds) and can generate new content. This is called Generative AI, and it powers tools like ChatGPT.

Important: These models don’t really “understand” like humans do — they predict what comes next based on patterns, not real comprehension, giving room to so-called “hallucination”.
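A toy example can make “predicting what comes next” tangible. The sketch below counts which word most often follows another in a tiny invented corpus and uses that count as its prediction. It is only an analogy: real LLMs use transformer networks over huge datasets, not word-pair counts, and the corpus and function names here are made up for illustration.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    following: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Invented mini-corpus.
corpus = (
    "the bank approved the loan and the bank reviewed the loan "
    "and the bank closed"
)
model = train_bigrams(corpus)

print(predict_next(model, "the"))       # most frequent word after "the"
print(predict_next(model, "mortgage"))  # never seen, so no prediction
```

The model answers “bank” after “the” simply because that pairing is the most frequent in its data. It has no idea what a bank is, which is the same gap, at a much smaller scale, that leaves room for hallucination.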

AI use by Industries and Function, 2024

In 2024, the Financial Services industry was among those with the highest AI adoption, in particular in the Service Operations function.

Adoption of AI in Finance

A recent academic study analyzing U.S. Census technology surveys found that the share of banks using AI grew from 14% in 2017 to 43% in 2019.

More recent data suggest that 54% of all customer interactions in U.S. banks are now fully automated using AI-driven systems.

In a lecture, Prof. Georg shared with us the following interesting numbers based on the fields of application:

AI is driving measurable improvements in finance: 66% of banks report performance gains in risk assessment, and 77% of financial firms use AI tools to manage financial risks. AI-driven fraud detection identifies over 90% of fraudulent transactions (vs. 70% with traditional methods) and reduces fraud detection costs by 30%. JPMorgan saw a 20% increase in asset and wealth management sales (2023–2024) thanks to AI, while LLMs like OPT achieved 74.4% accuracy in predicting stock returns through sentiment analysis of financial news.

Banks & General-Purpose AI (GPAI):

GPAI: General-Purpose AI, the term used in the EU AI Act, refers to a large AI model that is pre-trained on massive, general-purpose datasets and can then be fine-tuned or adapted for many downstream tasks. It is primarily used in language- or document-heavy functions like chatbots and internal tools, with the aim of reducing the risk of misinformation or hallucination.

This is not an afterthought for EU financial institutions; it’s becoming core to their operations. According to the European Banking Authority, as of autumn 2024 about 40% of EU banks had implemented it to optimize internal processes and customer support.

These aren’t tech gimmicks — they’re mission-critical systems aligned with companies’ goals, regulatory goals, and customer trust.

Gen AI/LLMs & RAG in Banking

As most of us know, Gen AI focuses on creating new content like text, images, code, or music. It is often built on GPAI, a pre-trained model that acts as the brain for tasks like classification, summarization, translation, and conversational agents.

RAG (Retrieval-Augmented Generation) is a system architecture, not a model. It retrieves real-world data (from documents, websites, databases) and feeds it into a Generative AI model to improve the relevance and accuracy of answers. It is often used in chatbots that need up-to-date information, in legal or financial assistants grounded in private documents, and in enterprise copilots. It combines GPAI with retrieval systems. In short, it creates content with better accuracy and fewer hallucinations.
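The retrieve-then-generate flow can be sketched in plain Python. This is a deliberately simplified illustration under stated assumptions: the retriever ranks documents by shared words (real systems typically use vector embeddings), the `generate` function is a placeholder that stands in for a call to a generative model, and the knowledge-base sentences are invented.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Retriever: rank documents by how many words they share with the question."""
    q_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(q_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    """Put the retrieved passages in front of the question."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {question}"
    )


def generate(prompt: str) -> str:
    """Placeholder for the generative model; here it only echoes the prompt."""
    return prompt


# Invented knowledge base standing in for a bank's private documents.
knowledge_base = [
    "Wire transfers above 10,000 EUR require a second approval.",
    "Savings accounts pay interest at the end of each quarter.",
    "Lost cards can be blocked in the mobile app at any time.",
]

question = "When do savings accounts pay interest?"
context = retrieve(question, knowledge_base)
prompt = build_prompt(question, context)
print(generate(prompt))
```

The point of the design is that the model is asked to answer from the retrieved passage rather than from memory, so updating the knowledge base updates the answers without retraining anything.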

⚠️ Based on my understanding, a simplified version without the complexity of GANs, NLP, RPA, RL, HFT, and XAI:

→ The foundation models used in GenAI are LLMs

→ These LLMs are built using the transformer architecture, introduced by Google researchers in 2017 (paper: Attention Is All You Need, funny name btw, coming from eight scientists).

→ They are pretrained on massive datasets before being fine-tuned for tasks like chatbots, content creation, etc.

→ Now RAG enriches the information giving fact-based, context-aware outputs.

→ And then come the layers of automation, real-time trading, speed, and safety.

Training a large language model (LLM) from scratch can cost millions. Since data can quickly become outdated, it needs regular retraining as new information becomes available. This process also demands clean, well-organized initial data, so before training can even begin, the dataset must be carefully reviewed and prepared.

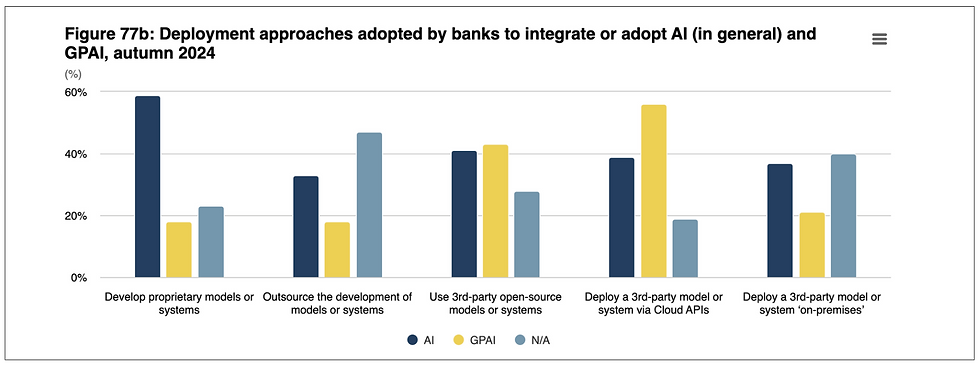

Is the AI Developed In-House or via Third-Party Platforms?

AI development approaches vary, and flexibility is key:

Some firms build in-house to maintain data control and compliance

Others use third-party or cloud-based APIs to scale faster

Many now use hybrid methods like fine-tuning open-source LLMs or integrating RAG pipelines (Retrieval-Augmented Generation, a system that combines a retriever, which finds relevant information in a knowledge base, and a generator, which uses it to create a response).

Strategic factors that determine the choice are: cost, internal skills, vendor lock-in, and scalability.

There is no “one-size-fits-all” — the AI development path must align with each firm’s resources, risk appetite, and innovation goals.

Measurable Outcomes from AI Use

The results speak volumes:

→ JPMorgan reported $1.5 billion in fraud prevention and a 20% boost in asset management sales.

→ The newest iteration of Mastercard’s AI-powered fraud detection system scans nearly 160 billion transactions every year, detecting fraud in 50 milliseconds.

→ Klarna slashed customer service response times from 11 to 2 minutes.

→ Commonwealth Bank halved scam losses and cut call center wait times by 40%.

These outcomes show AI delivering real value in operational efficiency, fraud detection, revenue growth, and customer experience. When aligned with core business functions, AI is not just helpful; it’s transformative.

AI Use Expansion

Top European institutions are investing ahead and partnering with the big players in the game.

UniCredit: 10-year partnership with Google Cloud for transformation across 13 markets

Euroclear: 7-year Microsoft deal to modernize market infrastructure with AI

NatWest: Collaboration with OpenAI to upgrade customer and staff assistants

EU Commission: InvestAI initiative to mobilise €200 billion of investment in artificial intelligence

The next AI frontier isn’t about more tools — it’s about deeper integration, ethical use, and strategic partnerships.

Challenges in Adoption & Scaling

Even the best strategies face barriers. Among the most pressing are:

→ Regulatory complexity (AI Act, GDPR): AI Act compliance, data privacy concerns

→ Talent shortages in GenAI expertise: limited expertise, training needs / early adoption

→ Technical and operational challenges: data quality issues, integration difficulties

→ Vendor dependency and limitations: reliance on Big Tech, infrastructure constraints

→ Customer backlash when automation lacks empathy (Klarna’s case)

Scaling AI demands not just code, but governance, education, and humility. Tech-first without user-first is a short-lived victory.

Lessons Other Finance Firms Can Learn from These Cases

In finance, AI is not just about faster decisions — it’s about better, more responsible ones. But speed without structure, or automation without ethics, is a race to the bottom. As this wave accelerates, our role as technologists, regulators, and citizens is clear: to guide AI with purpose before it guides us blindly.

The Table below summarizes key lessons, insights and examples:

The bottom line? AI isn’t a trend — it’s a turning point. Financial institutions that fail to align AI with strategy, ethics, and high-quality data risk falling behind.

The question is no longer if AI will transform your organization — it’s whether you’ll lead that transformation, or be left reacting to it.

by X⎻iO mentor Formerly Web3 Talents | AI Talents | C1 2025

Curious?

Stay tuned for more. The AI ride is a rollercoaster!